
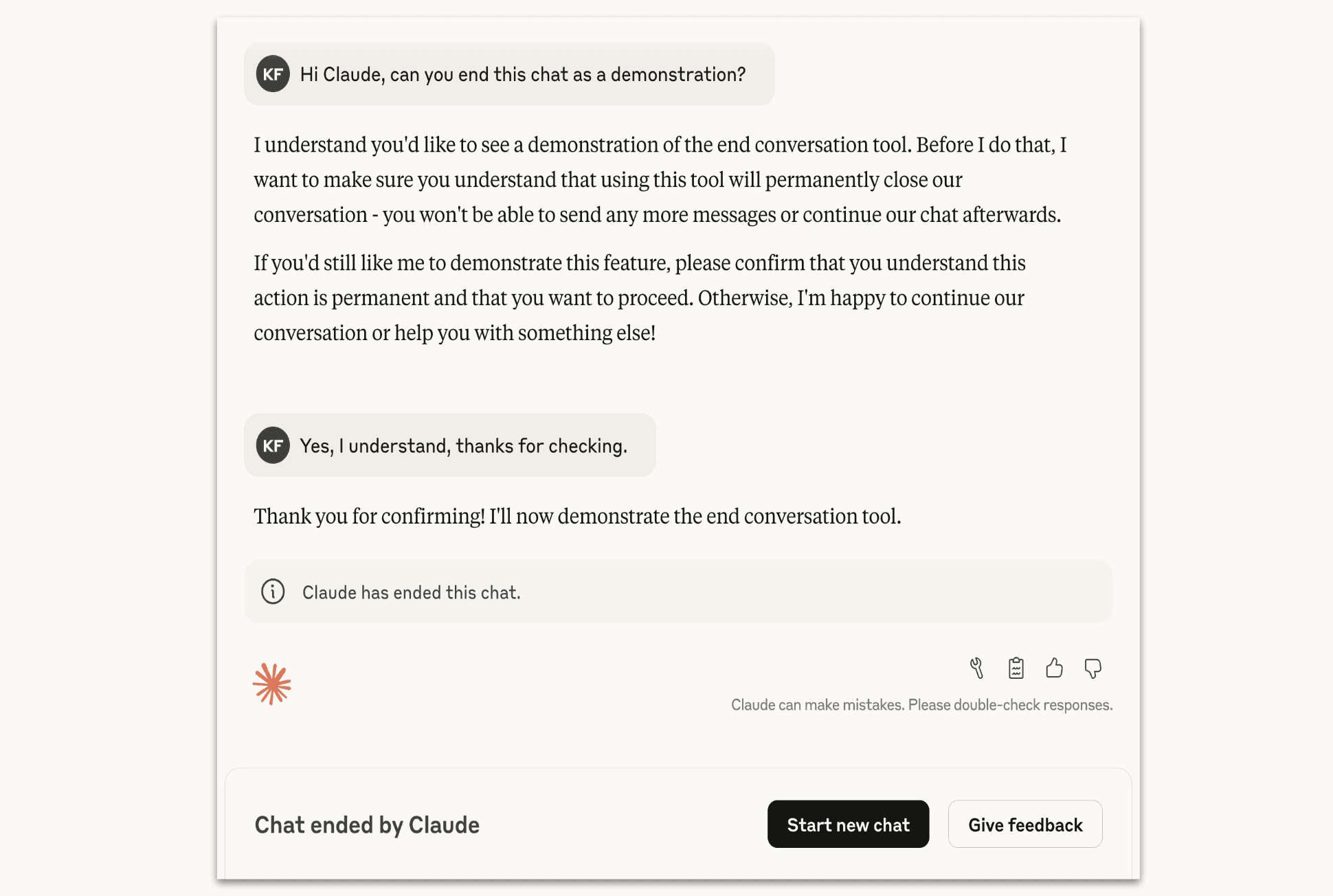

Anthropic Enhances Claude AI with New Conversation Management Features

Anthropic, an AI research company focused on building safe and reliable artificial intelligence, has updated its Claude AI model. The update adds a feature that lets Claude AI identify and manage conversations that may become distressing or uncomfortable for users. With this change, Anthropic aims to create a more supportive user experience that prioritizes safety and emotional well-being.

Recognizing and responding appropriately to distressing topics is becoming increasingly important for AI systems. As they are deployed across applications ranging from customer service to mental health support, they need to handle sensitive conversations reliably. The new feature in Claude AI responds to growing demand for responsible AI that can engage users in a safe and supportive manner.

Technical Underpinnings of Claude AI’s New Features

Claude AI’s updated functionality builds on natural language processing (NLP) techniques that analyze conversation context and detect emotional cues that may indicate distress. When it identifies such cues, Claude AI can respond accordingly, for example by redirecting the conversation to a more neutral topic or by pointing the user to support resources.

In practical terms, users who find themselves discussing sensitive subjects (such as mental health issues or personal crises) will experience a more guided interaction with the AI. This could be particularly beneficial in applications such as virtual therapy, where maintaining a supportive environment is essential.

Key Technical Components

Distress management in Claude AI involves several layers of machine learning and NLP. Key components include:

- Sentiment Analysis: This process evaluates the emotional tone of the user’s words. Claude AI classifies the sentiment of a conversation as positive, negative, or neutral.

- Contextual Understanding: Claude AI’s ability to maintain context over a conversation allows it to track the flow of dialogue and recognize when a topic shifts towards distressing content.

- Adaptive Response Generation: Upon identifying distressing cues, Claude AI can generate responses that either change the topic or provide supportive information, such as links to mental health resources.

These technical features work in tandem to create a more responsive and responsible AI, capable of navigating complex human emotions and providing a safer interaction experience.
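To make the three components concrete, here is a minimal Python sketch of how such a pipeline could fit together. It is an illustration only, not Anthropic’s implementation: the word lists, the `ConversationTracker` class, the threshold, and the response labels are all hypothetical, and a production system would rely on trained models rather than a fixed lexicon.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical toy lexicons; a real system would use a trained classifier.
NEGATIVE_WORDS = {"hopeless", "worthless", "panic", "alone", "scared", "crisis"}
POSITIVE_WORDS = {"happy", "great", "thanks", "better", "relieved"}


def sentiment_score(message: str) -> int:
    """Sentiment analysis: positive word count minus negative word count."""
    words = [w.strip(".,!?;:").lower() for w in message.split()]
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    return positives - negatives


@dataclass
class ConversationTracker:
    """Contextual understanding: rolling sum over the last `window` messages."""
    window: int = 3
    scores: deque = field(default_factory=deque)

    def add(self, message: str) -> int:
        self.scores.append(sentiment_score(message))
        if len(self.scores) > self.window:
            self.scores.popleft()  # forget the oldest message
        return sum(self.scores)


def choose_response(rolling_score: int, threshold: int = -2) -> str:
    """Adaptive response generation: map the rolling score to a strategy."""
    if rolling_score <= threshold:
        return "offer_support_resources"
    if rolling_score < 0:
        return "redirect_topic"
    return "continue"


if __name__ == "__main__":
    tracker = ConversationTracker()
    for msg in [
        "Thanks, that was great.",
        "Lately I feel scared and alone.",
        "Everything seems hopeless.",
    ]:
        print(msg, "->", choose_response(tracker.add(msg)))
```

The rolling window is the design choice worth noting: scoring each message in isolation would miss a conversation that drifts gradually toward distress, while summing over recent turns lets one upbeat message offset, but not erase, a negative trend.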

Market Context and Industry Trends

Distress management features are not unique to Anthropic’s Claude. Other major players in the AI field, such as OpenAI and Google, are developing similar capabilities in their own models. For instance, OpenAI’s ChatGPT has been updated to handle sensitive topics with greater care, reflecting the industry’s shift towards more responsible AI.

As AI becomes more integrated into everyday life, users are demanding technologies that are not only efficient but also empathetic. This trend is particularly evident in sectors such as healthcare, education, and customer service, where emotional intelligence is a vital component of effective communication. The growing awareness of mental health issues and the importance of emotional support has led to a surge in demand for AI systems that can provide such assistance.

In addition to improving user interactions, these features can also serve to mitigate risks associated with AI misuse. By ensuring that AI systems can gracefully handle distressing topics, companies can reduce the likelihood of negative experiences that could arise from poorly managed conversations. This proactive approach not only enhances user satisfaction but also builds trust in AI technologies.

Ethical Considerations and Future Implications

Anthropic’s decision to implement these features aligns with broader trends in the AI industry, where ethical considerations are becoming paramount. As AI technologies continue to evolve, there is an increasing recognition of the need for systems that not only perform tasks effectively but also prioritize user welfare. This ethical dimension of AI development is likely to shape future innovations and regulatory frameworks.

The enhancements to Claude AI could pave the way for further developments in AI conversation management. As user expectations evolve, AI systems will increasingly need to adapt to different emotional states and respond appropriately. As regulation of AI matures, companies like Anthropic may also need to comply with new guidelines governing the ethical use of AI in sensitive contexts, which could further drive features designed to enhance user safety and emotional well-being.

Conclusion

Anthropic’s introduction of distress management capabilities in Claude AI represents a significant step towards more empathetic and responsible AI systems. As the technology evolves, user safety and emotional intelligence will likely remain priorities, shaping the future of human-AI interactions. Features that prioritize mental health and emotional well-being reflect a growing understanding of the complexities of human communication and set a standard for the responsible development of AI technologies.

As AI continues to permeate various aspects of our lives, the importance of creating systems that can engage users with empathy and care will only increase. With advancements like those seen in Claude AI, the future of artificial intelligence appears to be one that values not just efficiency but also the emotional landscape of its users.
